I find myself preparing my first blog post of 2025 just past the middle of the year, reflecting on a relatively quiet year of public contributions in information security. This research was originally planned as a conference talk, but due to unforeseen circumstances it is being shared as a blog post instead. The work I present is not groundbreaking; rather, it revisits a known issue through a modern lens, using current tools and techniques. Driven by curiosity and a passion for connecting application security and cloud security, I’ve turned my attention to a lesser-explored corner of cloud security.

Although major Cloud Service Providers (CSPs) like AWS, GCP, and Azure dominate most cloud security research, alternative providers such as DigitalOcean are equally critical, especially given their popularity among individuals, startups, and SMEs. This research focuses specifically on DigitalOcean’s Cloud DNS service, with the aim of examining how overlooked misconfigurations can still pose a real security threat. This isn’t the first time I’ve worked with DigitalOcean either; I previously shared a set of security best practices for the platform on LinkedIn. This time, however, I wanted to go deeper and explore a single service of the platform comprehensively, using all the resources available to me.

Research Context & Scope

This article builds on prior research that showed how sub/domains can become “orphaned” and hijackable when a cloud DNS service allows adding zones without verifying ownership. In those earlier blog posts and write-ups, security researchers demonstrated that deleting a DNS zone from one account while leaving the nameserver (NS) records intact can allow anyone to claim the domain on that cloud DNS management platform. My analysis follows the same basic idea but uses updated data, a distinct approach, and robust automation. I emphasize that the intent is constructive: to identify and highlight security gaps so they can be remediated. All probing was done using open-source intelligence and public DNS queries, and any sensitive findings will be responsibly disclosed. There was no intent to harm any individual, organization, or DigitalOcean itself.

DigitalOcean Cloud DNS as Research Focus

For this project I chose to focus specifically on DigitalOcean’s Cloud DNS service. DigitalOcean is a popular cloud provider among developers and SMEs, but it has received relatively little formal security research compared to giants like AWS, Google Cloud, and Azure. My reasoning included:

- DigitalOcean serves a large community of startups and developers, yet its DNS service has been underexamined by researchers relative to other providers.

- Its DNS management interface is very easy to use and historically has lacked strict domain ownership verification, making it an interesting target for analysis.

- Based on my own experience with DigitalOcean’s infrastructure, I suspected that certain DNS misconfigurations could be present but overlooked.

By concentrating on DigitalOcean, I aimed to shed light on a lesser-explored cloud DNS service and understand what risks might exist for its users.

Introduction: The DNS Zone Takeover Problem

Dangling DNS (nameserver) records are surprisingly common yet often overlooked vulnerabilities. They occur when responsible parties forget to clean up or update DNS records in the domain registrar or Apex DNS control panel. These records can persist indefinitely as long as the domain remains active and DNS settings are left untouched.

Orphaned DNS records are a latent threat on their own, but they become dangerous once identified and exploited by malicious actors. Such exploitation can serve malvertising, phishing, vulnerability chaining, and other malicious activities.

The most dangerous thing about misconfigurations isn’t that they exist; it’s that they’re forgotten.

Cloud DNS service providers like AWS, Google Cloud, Azure (partially), and others are generally less vulnerable to these issues. DNS zone takeovers are typically mitigated through security measures implemented by these providers. For example, they require ownership verification if a domain or subdomain was previously hosted on their platform. Additionally, they assign unique nameservers to each domain, and those nameservers cannot be reissued to or claimed by a user from a different account.

However, DigitalOcean Cloud DNS does not appear to have any protection mechanisms or safeguards in place to prevent this, apart from domain limits (i.e., the number of domains an individual account can host). On the positive side, the DNS service is free, so users could retain their zones even when they are no longer actively using them; however, most users do not seem to take advantage of this. Given its popularity, ease of use, and pricing, I believe there is a high likelihood of finding domains still pointed to DigitalOcean nameservers, such as ns1.digitalocean.com and ns2.digitalocean.com, but no longer claimed or actively managed within the DigitalOcean Cloud DNS service.

Unfortunately, this issue is often misunderstood, even within the tech and cybersecurity communities. Nameserver (DNS) record/zone takeovers are frequently misclassified as subdomain takeovers on bug bounty platforms and in general discussions among security researchers, leading to widespread confusion about the true nature of the problem.

Illustrative Scenario

To make the problem concrete, consider a hypothetical example: A company uses a domain called peepdi.com, which was purchased from GoDaddy, a domain registrar.

They use DigitalOcean Cloud DNS to manage the domain. The cloud/network team sets the domain’s authoritative nameservers to ns1.digitalocean.com and ns2.digitalocean.com in the domain registrar’s settings and creates a DNS zone for the sub/domain in a DigitalOcean account.

Later, suppose the service associated with peepdi.com is shut down and the DigitalOcean account is deleted. If the NS records at the registrar are not updated, they will still point to DigitalOcean. In this state, the domain’s DNS is effectively orphaned: it still delegates authority to DigitalOcean, but the zone is no longer controlled by anyone.

An attacker who notices that peepdi.com still points to DigitalOcean nameservers could simply add that domain to their own DigitalOcean account. Once added, they would have full control of the domain’s DNS records, allowing them to redirect traffic, issue SSL certificates, host phishing sites, set up mail servers, or chain further web-based attacks such as cookie stealing under that name.

Decommissioning a cloud DNS account or service without cleaning up DNS entries can create a dangling zone that is vulnerable to DNS zone takeover.
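To make the precondition in this scenario concrete, here is a minimal sketch of the check "does this delegation still point at DigitalOcean?". It works on NS hostnames that were already looked up elsewhere, so it needs no network access. The function name and the inclusion of ns3.digitalocean.com alongside the two nameservers mentioned above are my own assumptions for illustration.

```python
# Nameservers treated as "DigitalOcean Cloud DNS". ns1 and ns2 are named in
# the text above; ns3 is an assumption added for completeness.
DO_NAMESERVERS = {
    "ns1.digitalocean.com",
    "ns2.digitalocean.com",
    "ns3.digitalocean.com",
}


def normalize_host(host: str) -> str:
    """Lower-case a hostname and drop the trailing root dot, if any."""
    return host.strip().rstrip(".").lower()


def delegates_to_digitalocean(ns_records) -> bool:
    """Return True if at least one NS record points at DigitalOcean."""
    names = {normalize_host(ns) for ns in ns_records}
    return bool(names & DO_NAMESERVERS)
```

A domain that passes this check is only a candidate; whether the zone is actually unclaimed still has to be determined from the DNS response, as described later in the methodology.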

Note that while this example focuses on a domain, similar issues can occur with subdomains, especially when a subdomain is delegated as a separate DNS zone. If you’re still unfamiliar with DNS takeovers, I highly recommend reading Project Discovery’s blog for a more in-depth explanation.

Methodology: Discovering and Exploiting Dangling NS Records

To establish a solid and well-defined methodology for identifying and exploiting dangling NS records, several critical components need to be addressed. This includes data collection, analysis processes, strategies for gathering large-scale proof of concept (PoC), information storage, responsible cleanup after takeover, and ensuring no harm is caused.

The goal here is NOT to automate bug bounty or find bugs, but rather to demonstrate the broader concept of Dangling DNS records. Upon re-evaluating the approach, I identified key elements that must be considered when attempting takeovers at scale, specifically for domains pointed to a DigitalOcean cloud DNS. A similar line of research was conducted by @IAmMandatory some time ago, but I believed the topic remained relevant and worth exploring further from a different angle.

| # | Key Challenges and Action Items |
| --- | --- |
| 1. | Identifying sub/domains pointed to DO Cloud DNS that return SERVFAIL and REFUSED |
| 2. | Handling DigitalOcean’s limitations on the number of hosted domains per account |
| 3. | Collecting Proof of Concept (PoC) data and conducting detailed analysis |
| 4. | Reporting and proposing potential mitigation strategies |

At first glance, executing this may seem straightforward. However, when scaling the effort to over a million domains, the process becomes significantly more complex. It is not as simple as it may appear in this blog, as several adjustments and changes had to be made along the way.

Data Sources and Discovery Technique

The approach required sourcing a large volume of domain and subdomain data from across the internet. The goal was to compile a reliable dataset that included only those domains that are in a vulnerable state or pointed to DigitalOcean nameservers. The data sources used for the domain collection process include, but are not limited to, the following:

- Cisco Umbrella Popularity List – Ranked list of active domains, similar to Alexa.

- Silent Push API – Reverse NS lookups (limited access).

- Public crawlers and data dumps – Open data from passive DNS feeds and certificates.

- ICANN CZDS Data Registry – Zone files from participating TLDs.

- Bug Bounty/VDP Targets – Extracted sub/domains from public BB/VDPs.

- Rapid7 Project Sonar – (Access requested; no data provided.)

Collecting domain data and designing an effective methodology to perform large-scale takeovers was a time-consuming task. It required significant effort and planning, but once the dataset was prepared, I was ready to proceed to the next step.

Overcoming DigitalOcean’s Domain Limitations for Scalable DNS Takeover Testing

DigitalOcean imposes a limit on the number of domains that can be hosted on a new account, typically no more than 10. Even after requesting an increase from their support team, the limit was raised to only 15–20 domains, which remained insufficient for performing multiple takeovers at scale.

To work around this limitation, I developed a strategy: add a domain, create and save the proof of concept (PoC), and then release the domain, all carried out in a subtle, non-anomalous manner to avoid detection by DigitalOcean. By adding domains or subdomains one at a time, performing the required operations, and then removing them, I was able to remain within platform limits while still successfully executing the task.
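The add, capture, release cycle described above can be sketched as a simple sequential loop. This is not the actual Teki code: `add_zone`, `run_poc`, and `delete_zone` are hypothetical callables standing in for the real API calls, and are injected so the control flow can be shown (and tested) without touching any provider.

```python
from typing import Callable, Dict, Iterable


def process_one_at_a_time(
    domains: Iterable[str],
    add_zone: Callable[[str], bool],      # hypothetical: True if the zone was created
    run_poc: Callable[[str], bool],       # hypothetical: True if PoC data was saved
    delete_zone: Callable[[str], None],   # hypothetical: releases the zone again
) -> Dict[str, str]:
    """Hold at most one zone at any moment: add, capture the PoC, release."""
    results: Dict[str, str] = {}
    for domain in domains:
        if not add_zone(domain):
            results[domain] = "not_added"
            continue
        try:
            results[domain] = "poc_saved" if run_poc(domain) else "poc_failed"
        finally:
            # Always release the zone, even if the PoC step raised, so the
            # per-account domain limit is never exhausted.
            delete_zone(domain)
    return results
```

The `finally` clause is the important design choice here: releasing the zone is unconditional, which is what keeps the account within the hosted-domain limit.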

Proof of Concept (PoC) and Data Analysis

Due to DNS hosting limits on DigitalOcean, multiple takeovers could not be performed at once or in batches. This constraint left me with only a limited window to execute each PoC. The approach involved carrying out the takeover in a short time frame, typically by adding an A record to the domain, capturing DNS dig screenshots, or archiving the web response using services like the Wayback Machine.

Multithreading was not feasible due to rate limits imposed by APIs, so I opted for a slower, more deliberate approach to avoid detection or disruption. Each successful PoC was documented and stored in a NoSQL database, which allowed for efficient storage and flexible querying and visualization during the data analysis phase using the MongoDB Atlas Charts feature.

Reporting and Proposing the Mitigations

After completing the takeover process, it became evident that we would be left with a large volume of domain data. The challenge was how to structure this data in a meaningful and actionable way for reporting purposes.

For domains with available WHOIS information, that data serves as the primary source for identifying and contacting domain owners to inform them about the vulnerable state of their assets. If a domain is associated with an organization, reaching out through official channels is also a viable option. Another effective route is to notify the domain registrar directly, informing them that their customers’ domains are in a vulnerable state and require attention. There are multiple approaches to reporting, but selecting the most feasible and effective method is crucial for meaningful impact.

As for mitigation, DigitalOcean could implement controls similar to those used by other major cloud DNS providers like AWS and GCP. These typically involve checks to prevent the reuse of orphaned DNS zones or notify users when nameservers are still pointing to inactive services.

Further recommendations may arise after a complete analysis of the data. If more effective solutions become apparent, they will be proposed based on the insights gathered.

LET’S DO THIS !

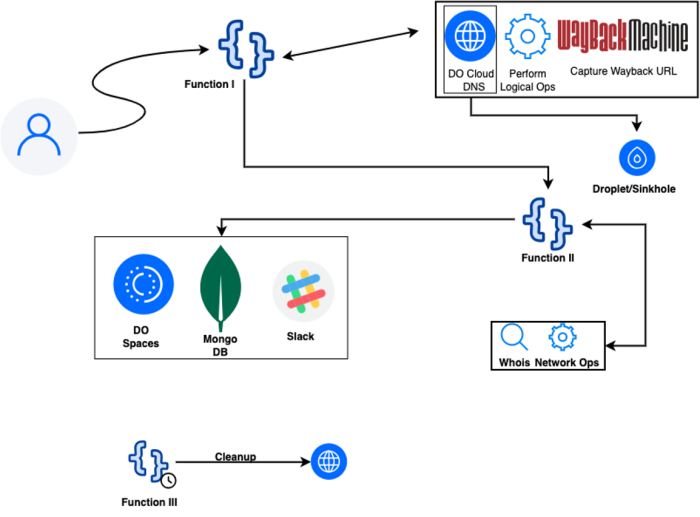

System Architecture Overview: Automation Workflow

While it may seem ironic, I deliberately chose to use DigitalOcean’s cloud platform to host the tools and services required for this research. This decision was made partly for convenience and partly to evaluate the platform’s behavior in a real-world takeover scenario. The availability of a free trial with credits also contributed to this choice.

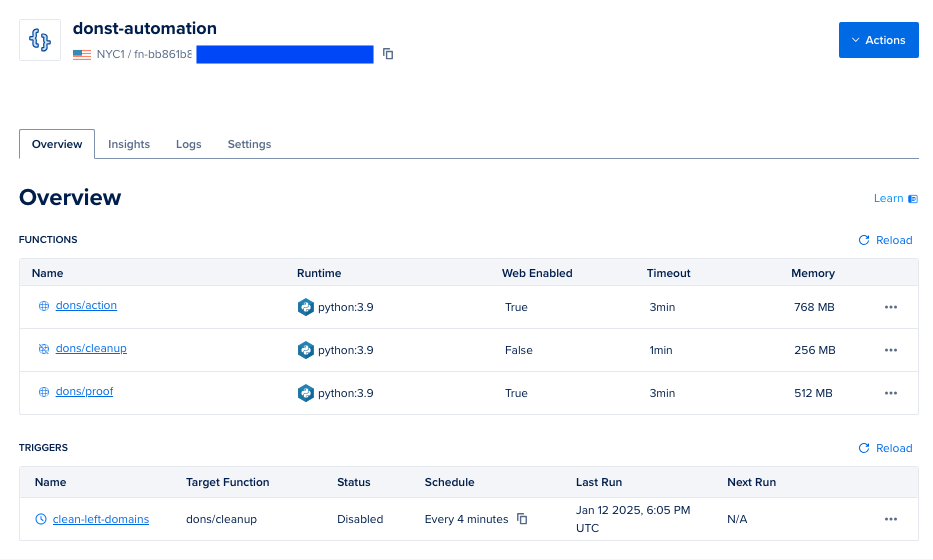

I refer to this project as the DO edition of Teki[1]. It is through this system that I was able to automate domain takeovers, collect proof of concept (PoC) data, and subsequently release the domains.

To implement this workflow, I utilized DigitalOcean’s serverless offering, known as DO Functions. By orchestrating the process within a serverless framework, I successfully automated the operations. The choice to use serverless computing was driven not only by the opportunity to leverage free computing resources but also by a desire to assess the runtime security of the serverless environment.

The architectural design and operational flow of Teki are outlined in the flowchart provided below.

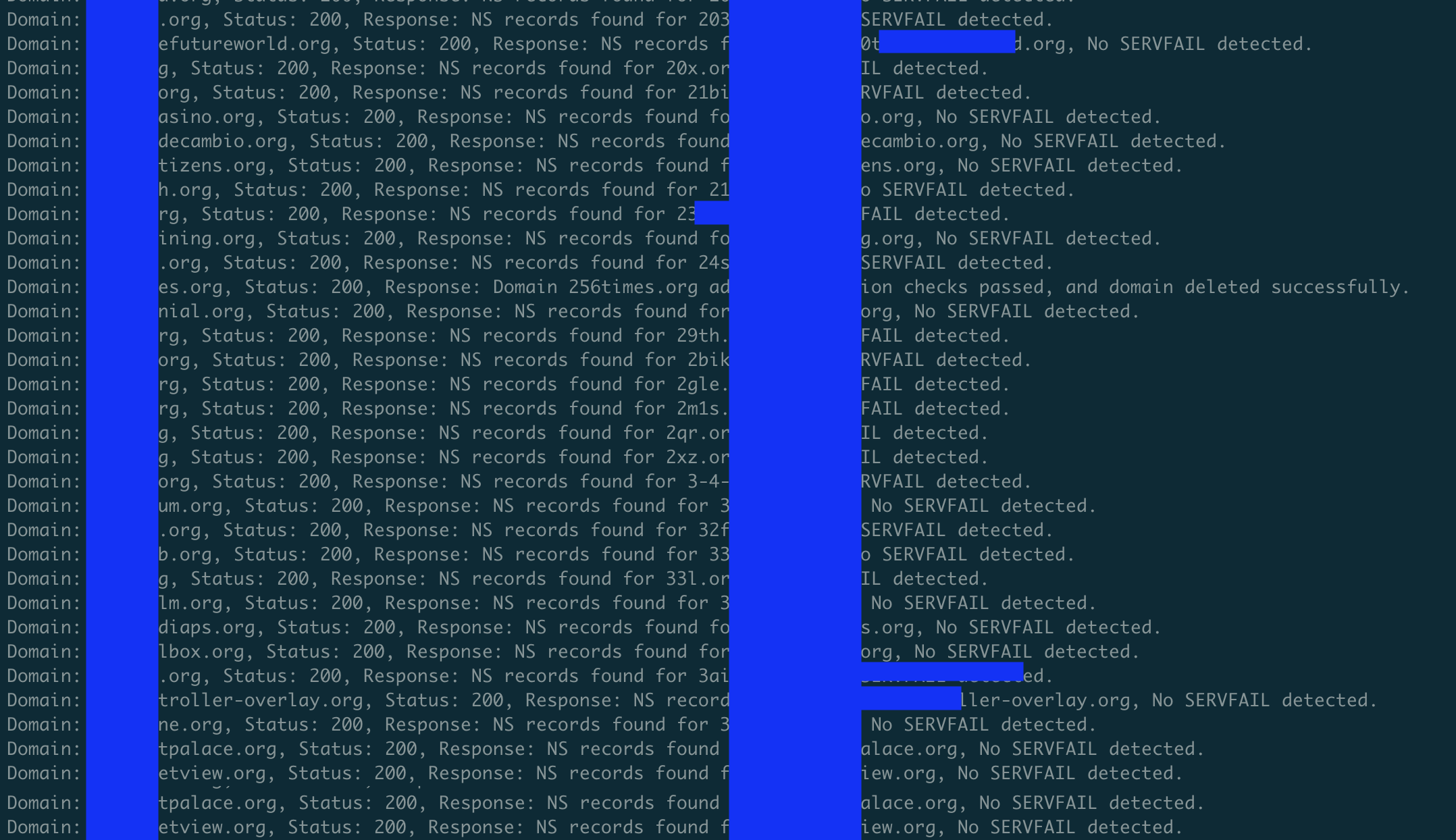

And the Serverless Function-III

The serverless Function-I takes input from the user via a parameter and first validates whether it is a valid domain using a provided regular expression and some additional logic. Once the domain is validated, it proceeds to look up the NS (nameserver) records using DNS utilities. This DNS lookup is performed using two different DNS resolvers: Google and DigitalOcean.
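The validation step could look roughly like the sketch below. The actual regular expression and the "additional logic" used in Function-I are not published, so the pattern here is my own assumption: it enforces the usual hostname rules (labels of 1 to 63 characters, no leading or trailing hyphen, at least two labels, total length of at most 253 characters).

```python
import re

# One DNS label: 1-63 chars of [a-z0-9-], not starting or ending with "-".
_LABEL = r"(?!-)[a-z0-9-]{1,63}(?<!-)"
# One or more labels followed by an alphabetic or punycode ("xn--") TLD.
_DOMAIN_RE = re.compile(
    rf"^(?:{_LABEL}\.)+(?:[a-z]{{2,63}}|xn--[a-z0-9-]{{1,59}})$"
)


def is_valid_domain(value: str) -> bool:
    """Return True if value looks like a syntactically valid sub/domain."""
    name = value.strip().rstrip(".").lower()
    return len(name) <= 253 and _DOMAIN_RE.fullmatch(name) is not None
```

For example, `is_valid_domain("sub.peepdi.com.")` accepts a fully qualified name with a trailing dot, while `is_valid_domain("-bad.com")` rejects a label that starts with a hyphen.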

Based on the response to the DNS query:

- If the domain returns a NOERROR[2] response, no further action is needed.

- If the response is SERVFAIL[3] or NXDOMAIN[4], further processing begins.

An NXDOMAIN response means that the domain does not exist; this could indicate that the domain has expired due to failed renewal or was never registered. Function-I logs domains returning NXDOMAIN and moves on to the next target. For SERVFAIL domains, the function proceeds to the next step: adding the domain to the DigitalOcean Cloud DNS panel via the API. While doing so, it also creates an A record pointing to the IP address of a Sinkhole Server[5].
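The branching on the DNS response code can be summarized as a small decision function. This is a sketch of the logic as described, not the original code; the enum and function names are hypothetical, and treating any other response code as "log only" is my assumption, since the text does not say how those are handled.

```python
from enum import Enum


class Action(Enum):
    SKIP = "skip"                          # zone is being served, nothing to do
    LOG_ONLY = "log_only"                  # record the domain and move on
    ATTEMPT_TAKEOVER = "attempt_takeover"  # add the zone via the API


def next_action(rcode: str) -> Action:
    """Map a DNS response code name to the next step of Function-I."""
    code = rcode.strip().upper()
    if code == "NOERROR":
        return Action.SKIP
    if code == "SERVFAIL":
        return Action.ATTEMPT_TAKEOVER
    if code == "NXDOMAIN":
        return Action.LOG_ONLY
    # Assumption: anything else (e.g. REFUSED, timeouts) is only logged.
    return Action.LOG_ONLY
```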

After the domain is added to the DNS panel, the script waits up to 10 seconds for propagation. Once the domain is assumed to have propagated, verification begins to confirm that the domain is successfully hosted and under control. This involves two parallel tests:

1. Web Check

Since the domain is now pointed to the sinkhole server’s IP address, the function sends an HTTP GET request to the target domain. If the response body matches the marker string “w5h4d0w” set in the serverless code, the test is marked as PASS.

2. DNS Check

A dig request is made for the A record of the domain. If the returned IP address matches the IP of the sinkhole server, this test is also considered PASS.

To pass the verification stage, at least one of the two tests must succeed (logical OR). This is because, in some scenarios, the Sinkhole Server may be down, or DNS queries may be rate-limited or blocked. However, in most cases, both tests pass. If both tests fail, it means that although the domain was added to the DNS panel, it is not actually hosted (possibly due to mismatched nameservers). In that case, the domain is deleted from the DNS panel and processing is terminated. Upon successful verification, an initial Slack notification is sent.
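The pass/fail rule above (logical OR of the two checks) can be expressed as follows. This sketch takes the already-fetched HTTP body and A records as inputs, so the networking is left out; the function names are hypothetical and the sinkhole IP in the usage note is a documentation-range placeholder, not the real server.

```python
from typing import Iterable, Optional

MARKER = "w5h4d0w"  # marker string served by the sinkhole, as named in the text


def web_check(body: Optional[str], marker: str = MARKER) -> bool:
    """PASS if the HTTP response body equals the expected marker."""
    return body is not None and body.strip() == marker


def dns_check(a_records: Iterable[str], sinkhole_ip: str) -> bool:
    """PASS if the domain's A records include the sinkhole IP."""
    return sinkhole_ip in set(a_records)


def verify_takeover(body: Optional[str], a_records: Iterable[str], sinkhole_ip: str) -> bool:
    """Logical OR: one passing check is enough to confirm control."""
    return web_check(body) or dns_check(a_records, sinkhole_ip)
```

For instance, `verify_takeover(None, ["203.0.113.10"], "203.0.113.10")` still passes when the web request failed (body is `None`) but the DNS answer matches, which mirrors the "sinkhole may be down" case described above.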

After verification, the domain name is handed off from Serverless Function-I to Serverless Function-II, which performs the Proof of Concept (PoC). This process involves three actions:

- Saving the domain’s state at archive.org so it can be accessed later via the Wayback Machine.

- Taking a screenshot of the dig response and saving it as base64 data.

- Retrieving the WHOIS technical contact email for the domain.

Once all the information is gathered, it is saved in MongoDB for statistical and analytical purposes. A final webhook notification is then sent via Slack, and cleanup is performed.

Serverless Function-III performs automatic cleanup in case domains are not deleted from the Cloud DNS panel, which is absolutely essential. I ran into a number of issues during this experiment, mostly due to API failures or other unexpected errors. To handle that, this third serverless function runs on a cron schedule, listing the domains in Cloud DNS and deleting them as needed.
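A cleanup pass of this kind might be structured as below. The DigitalOcean API does expose endpoints for listing and deleting domains, but to keep the sketch self-contained the two operations are passed in as hypothetical callables (`list_zones`, `delete_zone`) rather than real HTTP calls; the `keep` parameter is also my own addition.

```python
from typing import Callable, Iterable, List, Tuple


def cleanup_residual_zones(
    list_zones: Callable[[], Iterable[str]],  # hypothetical: names of hosted zones
    delete_zone: Callable[[str], None],       # hypothetical: raises on API failure
    keep: Iterable[str] = (),                 # zones that must never be removed
) -> Tuple[List[str], List[str]]:
    """Delete every leftover zone; return (deleted, failed) name lists."""
    protected = set(keep)
    deleted: List[str] = []
    failed: List[str] = []
    for name in list_zones():
        if name in protected:
            continue
        try:
            delete_zone(name)
            deleted.append(name)
        except Exception:
            # A failed delete is recorded and retried on the next cron run.
            failed.append(name)
    return deleted, failed
```

Because the function is idempotent, running it repeatedly on a schedule is safe: zones that failed to delete in one run are simply picked up again in the next.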

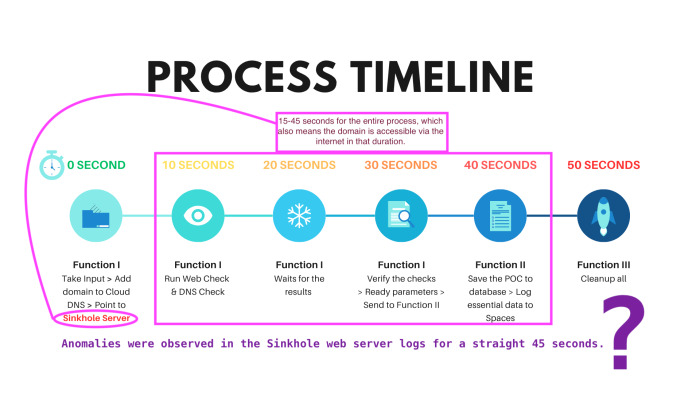

Below is the process timeline that demonstrates how Teki operates over time:

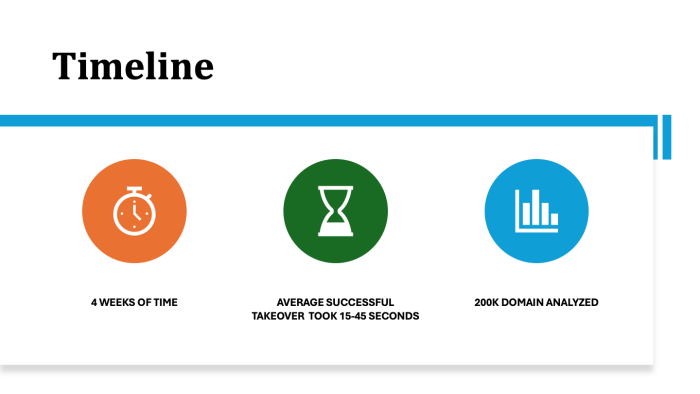

The takeover process takes approximately 15 to 45 seconds, depending on factors such as the response time from the DigitalOcean API, verification checks, and the processing time from the Wayback Machine.

TL;DR: What does Teki do?

| Function | Responsibilities |
| --- | --- |
| Function-I (Action) | Validate target; take over and verify; get parameters for the PoC and pass them to Function-II; clean up |
| Function-II (POC) | Invoked by Function-I only after a takeover; receive parameters from Function-I; get the WHOIS contact email of the domain; save the dig record’s screenshot; push information to MongoDB |
| Function-III (Ensure Cleanup) | Clean up residual domains left behind by DO API errors; runs as a cron job every 240 seconds |

Table: Role of different serverless functions for takeover operation.

If you’ve been paying close attention, you might be wondering how I managed to take a screenshot in a serverless environment, which should be a problem. And honestly, it was. But I came up with a weird little hack: instead of actually taking a screenshot, I fetched the text output from the dig request and rendered it onto a canvas styled to look like a terminal background. Then I exported it as a base64 image. So technically, we constructed the screenshot instead of capturing one, a pretty funny moment in my coding journey, but it worked!
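The "constructed screenshot" idea can be illustrated without any graphics library. The original implementation rendered onto a canvas (cairocffi appears in the library list); the sketch below swaps that for a plain SVG string, which is my own substitution, and then base64-encodes it. Colors, font metrics, and the function name are all assumptions.

```python
import base64
from html import escape


def render_terminal_image(text: str, font_px: int = 14, pad: int = 12) -> str:
    """Render text as a terminal-styled SVG and return it base64-encoded."""
    lines = text.splitlines() or [""]
    line_height = round(font_px * 1.4)
    # Rough width estimate for a monospace font (about 0.62 em per character).
    width = round(max(len(line) for line in lines) * font_px * 0.62) + 2 * pad
    height = line_height * len(lines) + 2 * pad
    rows = "".join(
        f'<text x="{pad}" y="{pad + line_height * (i + 1) - 4}" '
        f'xml:space="preserve">{escape(line)}</text>'
        for i, line in enumerate(lines)
    )
    svg = (
        f'<svg xmlns="http://www.w3.org/2000/svg" width="{width}" height="{height}">'
        '<rect width="100%" height="100%" fill="#1e1e1e"/>'
        f'<g font-family="monospace" font-size="{font_px}" fill="#d4d4d4">{rows}</g>'
        "</svg>"
    )
    return base64.b64encode(svg.encode("utf-8")).decode("ascii")
```

The returned string can be stored directly in a text field of a database document and decoded back into an image file later.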

The following architecture diagram is aligned with the underlying technology stack. Efforts have been made to present it in a minimalistic and high-level manner.

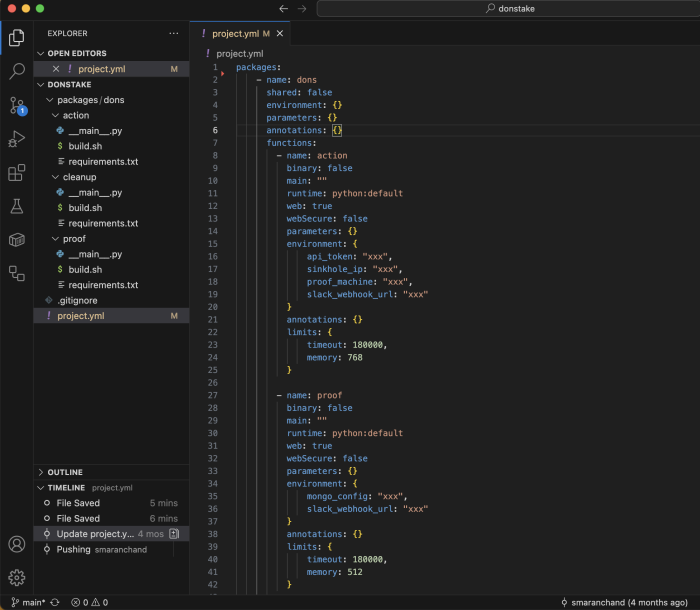

After designing the system and spending several hours coding through trial and error, I successfully deployed the serverless functions. Due to certain constraints, I am unable to share the code for the serverless implementation. However, I believe the accompanying flowchart sufficiently illustrates the overall logic and structure of the project. Providing the actual code might not only diminish the learning value but could also raise ethical concerns.

Nonetheless, if you have a valid reason and would like to review the implementation, feel free to contact me.

To streamline the deployment process, I utilized the doctl CLI in conjunction with Terraform. The deployed infrastructure consists of three serverless functions, a DigitalOcean droplet aka Sinkhole Server and a DigitalOcean Spaces bucket used for logging purposes.

Results: Real-World Takeovers

Following the deployment of the resources, the serverless function operated as intended over a period of time. It is worth noting that the entire analysis process spanned approximately one month, which I found acceptable given that the system was consistently performing takeovers, generating proofs of concept, and handling cleanup operations effectively.

Although one of the key objectives of this research was to utilize the free tier of the serverless environment and the free database cluster from MongoDB.com, I was only partially successful, as I ultimately had to spend a small amount to complete the project.

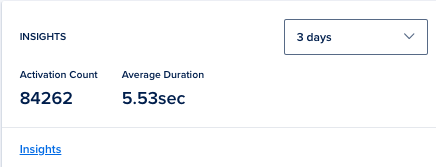

Below are the key insights gathered from the serverless functions:

Scale and Impact

A total of over one million domains were initially gathered. After preliminary validation, I sorted and selected 200,000 domains for further analysis. The scanning process ran for approximately one month, during which we identified a significant number of vulnerable domains. Unsurprisingly, around 40% of the domains that had authoritative nameservers pointing to DigitalOcean were found to be vulnerable.

This resulted in the successful hijacking of 40,084 domains that were pointed to DigitalOcean’s Cloud DNS service. For each of these domains, a proof of concept (PoC) was generated, after which they were responsibly reverted to their original state. The high success rate of these takeovers was unexpected and underscores the significant risk posed by this vulnerability. It highlights the potential for exploitation by malicious actors, given the scale and ease of abuse, making it a valuable target for misuse.

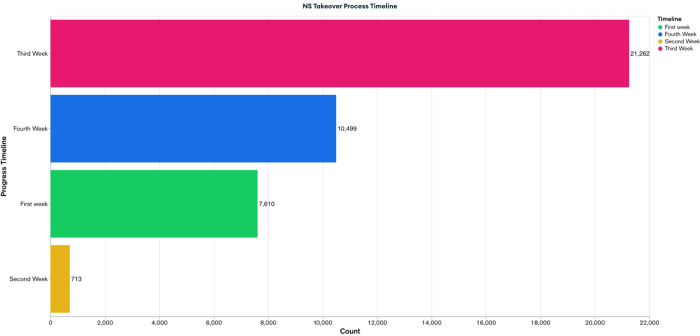

The timeline of this takeover process is illustrated in the following section, where the logs have been analyzed and presented in the form of a bar graph.

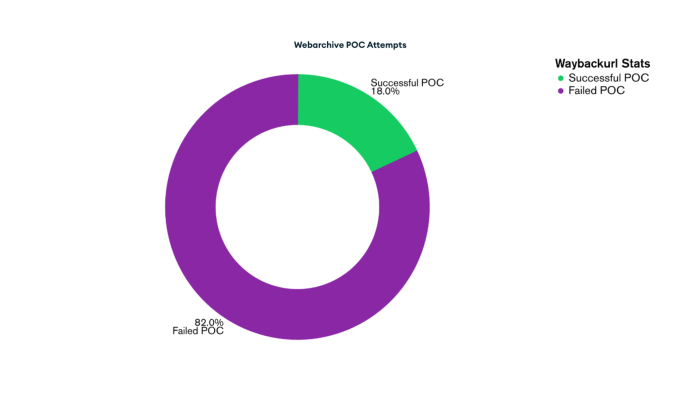

These are the attempts made by this edition of Teki. As previously discussed, all DNS check PoCs were successful, but most Web Archive submissions failed, likely due to IP-based rate limiting or heuristic analysis on the Wayback Machine’s end. Even so, as the chart shows, about 20% of the PoCs were successfully saved on archive.org.

Tools, Libraries & Infrastructure

The architecture adopted for this analysis was intentionally minimalistic and serverless to ensure scalability and ease of deployment. The following tools and technologies were used across different components of the workflow:

Computing: Utilized DigitalOcean Functions to run serverless code, ensuring low operational overhead and flexibility.

Programming Language: Primary scripting and analysis were done using Python and Bash for automation and processing tasks.

Libraries: dnspython, cairocffi, dnslib, python-whois, pycparser, certifi, pymongo, idna, re, collections.Counter, matplotlib, seaborn

Storage: Static data and output files were stored in DigitalOcean Spaces, a scalable object storage solution.

Database: Domain metadata and POC results were stored in a NoSQL MongoDB instance from MongoDB Atlas.

Visualization: Used MongoDB Charts, Microsoft Excel, GoAccess, and Python plotting libraries for data visualization and insight generation.

Infrastructure Management: Deployment and configuration were managed using the doctl CLI (DigitalOcean command-line tool) and Terraform for Infrastructure as Code (IaC).

AI Assistance: Leveraged OpenAI’s ChatGPT and Microsoft Copilot for research.

Data Analysis

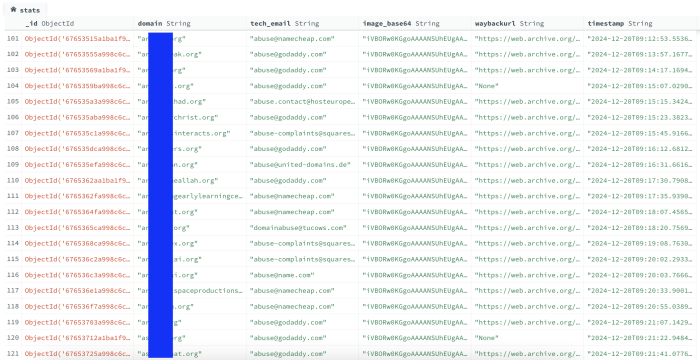

With all the insights and potential it offers, the data stored in our NoSQL database can be leveraged to uncover valuable information. While I am not a data analyst, I see numerous possibilities and patterns that could emerge through further analysis. The stored data includes details such as the domain name – domain, technical contact email – tech_email, dig screenshot – image_base64, the Wayback Machine URL – waybackurl, and a timestamp.

The tech_email field holds the technical contact information of the domain owner, provided the WHOIS details are publicly accessible. This information can potentially lead to additional insights. The waybackurl field may be empty in cases where the attempt to archive the page was unsuccessful. I tried various strategies, such as rotating the User-Agent string and introducing delays (sleep), but they were not always effective. If you happen to know a better method for reliably archiving pages at scale, I would be glad to learn about it.
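The record layout described above maps naturally onto a small data class. The field names domain, tech_email, image_base64, and waybackurl come from the text; the name `timestamp` for the time field and the class itself are my assumptions, and the resulting dictionary is what one would hand to a MongoDB insert call.

```python
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass
class PocRecord:
    domain: str
    tech_email: Optional[str]         # None when WHOIS data is hidden
    image_base64: str                 # constructed dig "screenshot"
    waybackurl: Optional[str] = None  # None when archiving failed
    # Assumed field name; stored as a timezone-aware UTC datetime.
    timestamp: datetime = field(default_factory=lambda: datetime.now(timezone.utc))

    def to_document(self) -> dict:
        """Return a plain dict suitable for inserting into a collection."""
        return asdict(self)
```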

The image below illustrates the structure of the database, including the collection names and the data it contains:

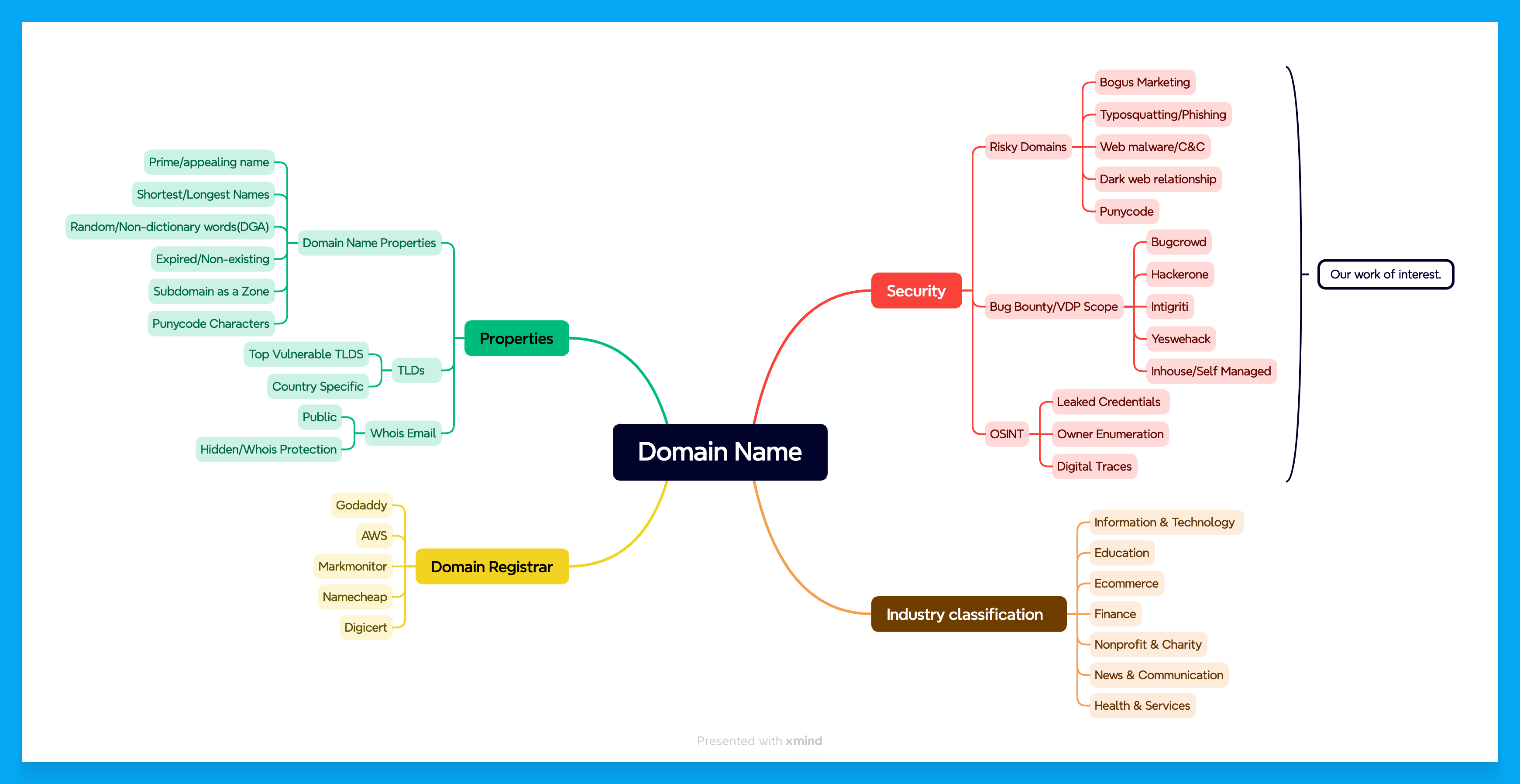

After a few hours of brainstorming, I created the following mind map, which outlines potential ways to use this data for research and to uncover interesting patterns and facts. I did not perform every analysis shown in the mind map, but the data offers numerous possibilities.

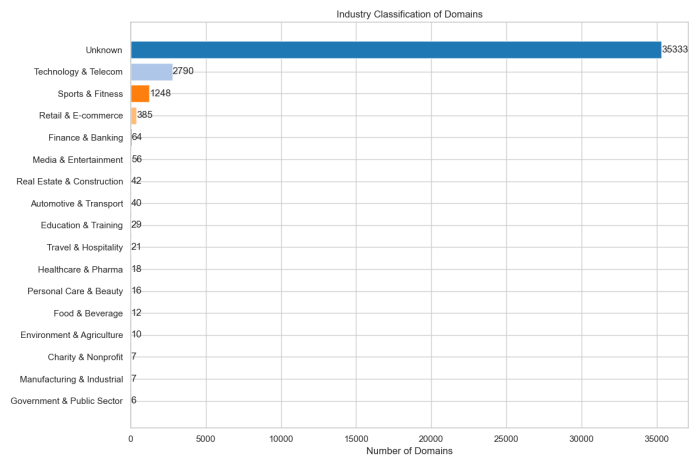

Industry Classification

Using a hand-picked list of keywords and a few Python libraries, I performed an industry classification analysis on the list of domains. The goal was to categorize domains into broad industry sectors based on keyword matches in their names, although this is by no means a rigorous method for the job.
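A keyword-based classifier of this kind can be sketched in a few lines. The keyword lists below are hypothetical placeholders (the actual lists are not published), and plain substring matching is used, which is exactly why such a method produces false positives and a large Unknown bucket.

```python
from collections import Counter
from typing import Dict, Iterable

# Hypothetical keyword lists; only three of the categories are shown.
INDUSTRY_KEYWORDS = {
    "Technology & Telecom": ("tech", "cloud", "soft", "net"),
    "Sports & Fitness": ("sport", "fit", "gym"),
    "Retail & E-commerce": ("shop", "store", "mart"),
}


def classify_industry(domain: str) -> str:
    """Return the first industry whose keyword occurs in the name (TLD excluded)."""
    name = domain.lower().rstrip(".")
    body = name.rsplit(".", 1)[0]  # drop the final label (the TLD)
    for industry, keywords in INDUSTRY_KEYWORDS.items():
        if any(keyword in body for keyword in keywords):
            return industry
    return "Unknown"


def industry_share(domains: Iterable[str]) -> Dict[str, float]:
    """Percentage of domains per industry."""
    counts = Counter(classify_industry(d) for d in domains)
    total = sum(counts.values())
    return {industry: 100.0 * n / total for industry, n in counts.items()}
```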

The analysis revealed that a significant majority of domains (88.15%) fell into the Unknown category, indicating many domains did not match our predefined industry keywords. Among the identified categories, Technology & Telecom was the most prevalent at 6.96%, followed by Sports & Fitness (3.11%) and Retail & E-commerce (0.96%).

Other industries such as Finance & Banking, Media & Entertainment, Real Estate & Construction, and Automotive & Transport each represented less than 0.2% of the domains. Smaller proportions were seen in Education & Training, Travel & Hospitality, Healthcare & Pharma, Personal Care & Beauty, Food & Beverage, Environment & Agriculture, Charity & Nonprofit, Manufacturing & Industrial, and Government & Public Sector, all together comprising less than 1% of the dataset.

This classification provides a high-level overview of the domain landscape, highlighting the dominance of technology-related domains and the large share of uncategorized or potentially niche domains. It also raises a concern: since every domain in this dataset was vulnerable, Technology & Telecom, as the largest identified group, may be the most exposed industry. This underscores the need for security focus within the tech sector.

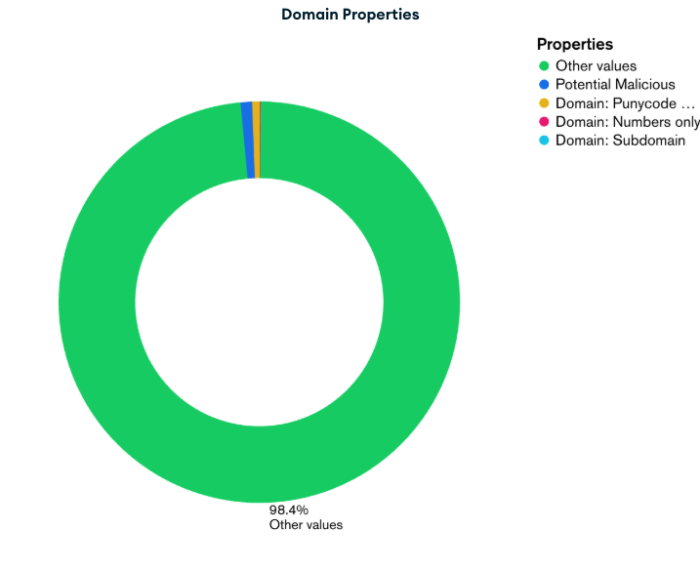

Domain Properties

Additional analysis was performed on various domain properties to identify potentially suspicious or malicious characteristics. This included detecting:

- Potentially malicious or phishing-like domains, such as lookalikes of popular brands (e.g., facebo0k.com, gooogle.net)

- Domains containing Punycode characters, which are often used in Homograph Attacks[6]

- Domains composed of numbers only

- Domains using subdomains as the primary hosted (apex) zone

Surprisingly, the analysis found that:

- 0.72% of domains exhibited potentially malicious or phishing-like patterns

- 0.51% contained Punycode characters

- 0.15% were numeric-only domains

- 0.12% used subdomains as apex zones

Meanwhile, 98.4% of the domains did not match any of these suspicious patterns based on the current detection logic. This suggests either a relatively low prevalence of overtly suspicious domains or limitations in the current analysis approach, highlighting the need for more advanced detection techniques in future studies.
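The four checks listed above can be approximated with simple string rules. This is a sketch under stated assumptions, not the detection logic actually used: the brand list is a two-entry placeholder, lookalikes are detected only via digit-for-letter swaps and repeated characters, and "subdomain as zone" is approximated as "more than two labels", which ignores multi-label public suffixes such as co.uk.

```python
import re
from typing import Set

BRANDS = ("facebook", "google")  # hypothetical reference list
LEET = str.maketrans({"0": "o", "1": "l", "3": "e", "5": "s"})


def _squash(label: str) -> str:
    """Undo digit-for-letter swaps, then collapse runs of repeated characters."""
    return re.sub(r"(.)\1+", r"\1", label.translate(LEET))


def domain_flags(domain: str) -> Set[str]:
    """Return the set of suspicious-pattern flags that apply to a domain."""
    labels = domain.lower().rstrip(".").split(".")
    main = labels[-2] if len(labels) >= 2 else labels[0]
    flags: Set[str] = set()
    if any(label.startswith("xn--") for label in labels):
        flags.add("punycode")
    if main.isdigit():
        flags.add("numeric_only")
    if len(labels) > 2:  # naive: ignores suffixes like co.uk
        flags.add("subdomain_zone")
    if any(main != brand and _squash(main) == _squash(brand) for brand in BRANDS):
        flags.add("lookalike")
    return flags
```

Rules this simple will miss many real lookalikes (for example, character insertions or swapped TLDs), which is consistent with the limitation noted above.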

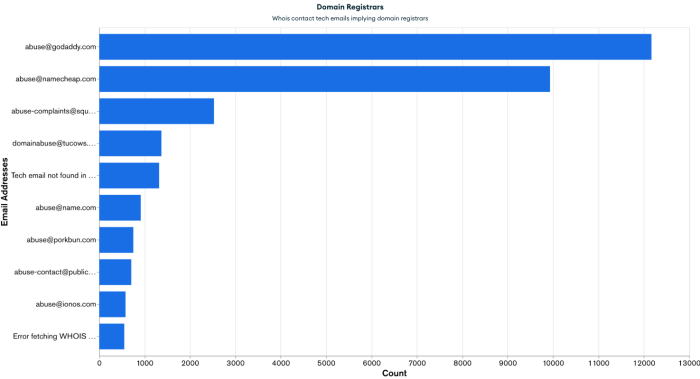

Top Vulnerable Domain Registrars & Public WHOIS Data

While analyzing WHOIS technical contact emails, I observed that most domains had WHOIS privacy protection enabled. However, many still displayed tech contact addresses such as [email protected] and [email protected]. Based on these recurring patterns, I was able to associate vulnerable domains with their respective registrars.

This correlation does not necessarily indicate that these registrars are the most vulnerable; rather, it likely reflects their large market share. I observed that GoDaddy had the highest number of vulnerable domains, with over 12,000, followed by Namecheap, which accounted for nearly 10,000. These figures are consistent with their dominance in the domain registration industry.

That said, I believe domain registrars can take a more proactive role in mitigating DNS zone takeover risks. If a registrar detects that a domain has authoritative nameservers configured but no active zone being served, it could implement mechanisms to flag the issue or notify the domain owner. Registrar-level checks and alerts would significantly reduce the likelihood of such domains being exploited due to dangling DNS configurations.

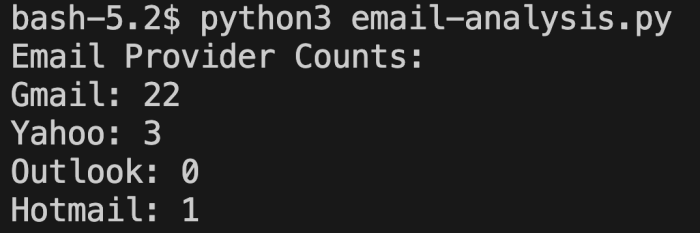

Public Email Addresses

From the same dataset, I was also able to extract a small number of public email addresses belonging to users or organizations, excluding registrar-related contacts. However, the widespread use of WHOIS privacy protection significantly limited this effort.

Fewer than 30 email addresses were publicly available. This trend introduces a clear challenge as it becomes increasingly difficult to contact domain owners directly to inform them about potential vulnerabilities or to responsibly disclose security concerns.

Interesting Findings

Apart from the domain takeovers and data analysis, several other interesting findings emerged during the course of this research. Although these insights were not part of the initial objective, they revealed unexpected areas of concern and added depth to the overall investigation.

DO internal Domain Takeover

Since we were running the scan at scale, which included all domains associated with DigitalOcean’s Cloud DNS service, it was likely that DigitalOcean had also used this service for some of its own infrastructure. As a result, Teki scanned and successfully identified a domain that belonged to DigitalOcean. The domain was subsequently hijacked as part of the testing process. This issue was reported through their responsible disclosure program, and DigitalOcean acknowledged the report, fixed the issue, and awarded a bounty in recognition.

Software Supply Chain Hijack

Out of curiosity, I analyzed the web server logs of the Sinkhole Server and, to my surprise, found something I had not expected.

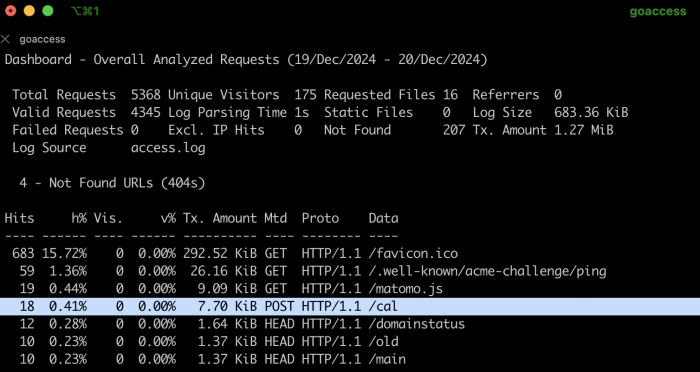

A complete takeover takes approximately 15 to 45 seconds. This is the maximum window during which the domain resolves to the Sinkhole Server and is reachable from the internet during the takeover and PoC process. For analysis purposes, we can assume a maximum window of 60 seconds. During this timeframe, I discovered some HTTP POST requests made to the endpoint vulnerabledomain.com/cal.

Upon analyzing the source IPs of the requests, I identified seven different websites that were actively making POST requests to the domain I had taken over. Further investigation revealed that these websites were developed by the same vendor for multiple clients. Each of them was configured to send POST requests for logging or user analytics purposes. Interestingly, the server designated to receive these logs (the “ingester”) was no longer active on DigitalOcean, indicating that the domain had been abandoned, and I happened to take it over.

However, as soon as Teki hosted the domain, it immediately received 18 hits within a 45-second window. The /cal endpoint received data in the form of heartbeat=true&time=timestamp&domain=domain_name. While in this case the compromised domain was only receiving data, it highlights a broader concern: if the data had also been consumed or acted upon by the vendor’s client-side code, it would have been possible to manipulate or exfiltrate sensitive information. This illustrates how an attacker could passively collect analytics data or worse after compromising a single abandoned vendor-controlled domain.
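To show how little effort it takes to consume such traffic, here is a minimal sketch that parses a request body in the format quoted above using only the standard library. The function name and the sample values in the usage note are hypothetical.

```python
from typing import Dict, Optional
from urllib.parse import parse_qs


def parse_heartbeat(body: str) -> Optional[Dict[str, Optional[str]]]:
    """Parse a form-encoded heartbeat body; return None if it is not one."""
    fields = {
        key: values[0]
        for key, values in parse_qs(body, keep_blank_values=True).items()
    }
    if fields.get("heartbeat") != "true":
        return None
    return {"time": fields.get("time"), "domain": fields.get("domain")}
```

For example, `parse_heartbeat("heartbeat=true&time=1718000000&domain=client.example")` yields the reporting site's name and timestamp, which is all an attacker would need to enumerate the vendor's clients.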

I tried to contact the vendor about the issue, but it appeared they had recently closed their business, and no responsible person could be found: a real “deadlock” of a supply chain issue. Still, I did my best to communicate with them as part of my responsibilities as an ethical hacker. It is worth stressing how costly a vendor’s mistake can be; for more on this, have a look at my article: The Organization, Vendor & Application Security

Bug Bounty & VDP Reports

Finding vulnerable domains that pointed to DigitalOcean and were still unreported was quite unlikely, given the scale and the competition in the bug bounty space. I assume I was not the only one running these scans, and naturally, there is always a race to discover and report valid issues. Despite the odds, I was excited to uncover 11 domains that were actually in scope under various self-managed VDP and bug bounty programs. Out of roughly 40,000 domains scanned, this accounts for just 0.0275% (11 / 40,000). It was a small number, but still a fascinating result. All of these findings were reported through the proper channels.

Hackers’ Infrastructure Takeover

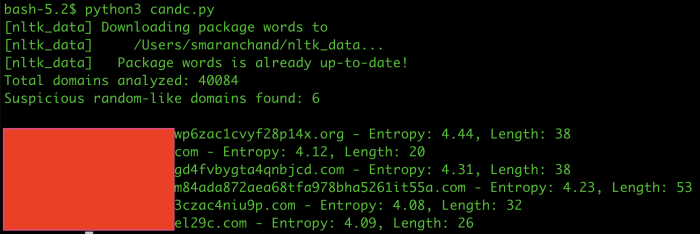

To identify domains potentially used in malware Command and Control infrastructure, I analyzed domain name patterns using Python libraries and statistical methods. Using the Natural Language Toolkit library, I compared domain segments against a large corpus of English words to distinguish meaningful content from random strings. Simultaneously, I calculated the Shannon entropy of each domain’s main label with a function to measure character randomness.

Higher entropy values indicate greater randomness, typical of algorithmically generated malware domains, while lower values suggest meaningful sequences. By combining entropy and dictionary checks, I flagged domains with high randomness and no English words as suspicious. This approach proved effective in detecting pseudo-random domains used in malware Command and Control communication. Out of six such discovered domains, three were confirmed as part of C&C infrastructure and were flagged by malware detection tools.
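The combination of entropy and dictionary checks described above can be sketched roughly as follows. This is not the exact code used in the research: the tiny `ENGLISH_WORDS` set is a hypothetical stand-in for the large NLTK word corpus, the 3.5-bit threshold is an assumed value, and `main_label` is a naive helper that does not handle multi-part suffixes such as co.uk.

```python
import math
from collections import Counter

# Hypothetical stand-in for a large English word list (the research used
# an NLTK corpus; a small set keeps this sketch self-contained).
ENGLISH_WORDS = {"cloud", "shop", "mail", "secure", "login", "store", "news", "data"}


def shannon_entropy(label: str) -> float:
    """Shannon entropy of the label's character distribution, in bits per character."""
    if not label:
        return 0.0
    total = len(label)
    counts = Counter(label)
    return -sum((n / total) * math.log2(n / total) for n in counts.values())


def contains_english_word(label: str, words=ENGLISH_WORDS, min_len: int = 4) -> bool:
    """True if any substring of at least min_len characters is a known word."""
    label = label.lower()
    return any(
        label[i:j] in words
        for i in range(len(label))
        for j in range(i + min_len, len(label) + 1)
    )


def main_label(domain: str) -> str:
    """Naive main label: the part just before the last dot-separated label."""
    parts = domain.lower().rstrip(".").split(".")
    return parts[-2] if len(parts) >= 2 else parts[0]


def is_suspicious(domain: str, threshold: float = 3.5) -> bool:
    """Flag domains whose main label is high-entropy and contains no known word."""
    label = main_label(domain)
    return shannon_entropy(label) >= threshold and not contains_english_word(label)


print(is_suspicious("xk7qzr9wplm3vb.com"))
print(is_suspicious("securecloudshop.com"))
```

Requiring both conditions keeps false positives down: short brand names can have moderate entropy, and long random strings can accidentally contain a short word, so neither signal is reliable on its own.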

Post-Takeover Observations and Disclosure

It is important to note that no customer data was harmed or accessed during the course of this research. However, following the successful DNS zone takeovers, proof-of-concept validations, and data analysis, one critical question remained: how aware are organizations and individuals of such vulnerabilities, and how long would it take them to detect and remediate the issue? More importantly, could similar takeovers be performed by someone with malicious intent?

To explore the broader implications, I sent HTTP GET requests to a random sample of 1,000 domains to observe their response codes, page titles, and the IP addresses they resolved to.

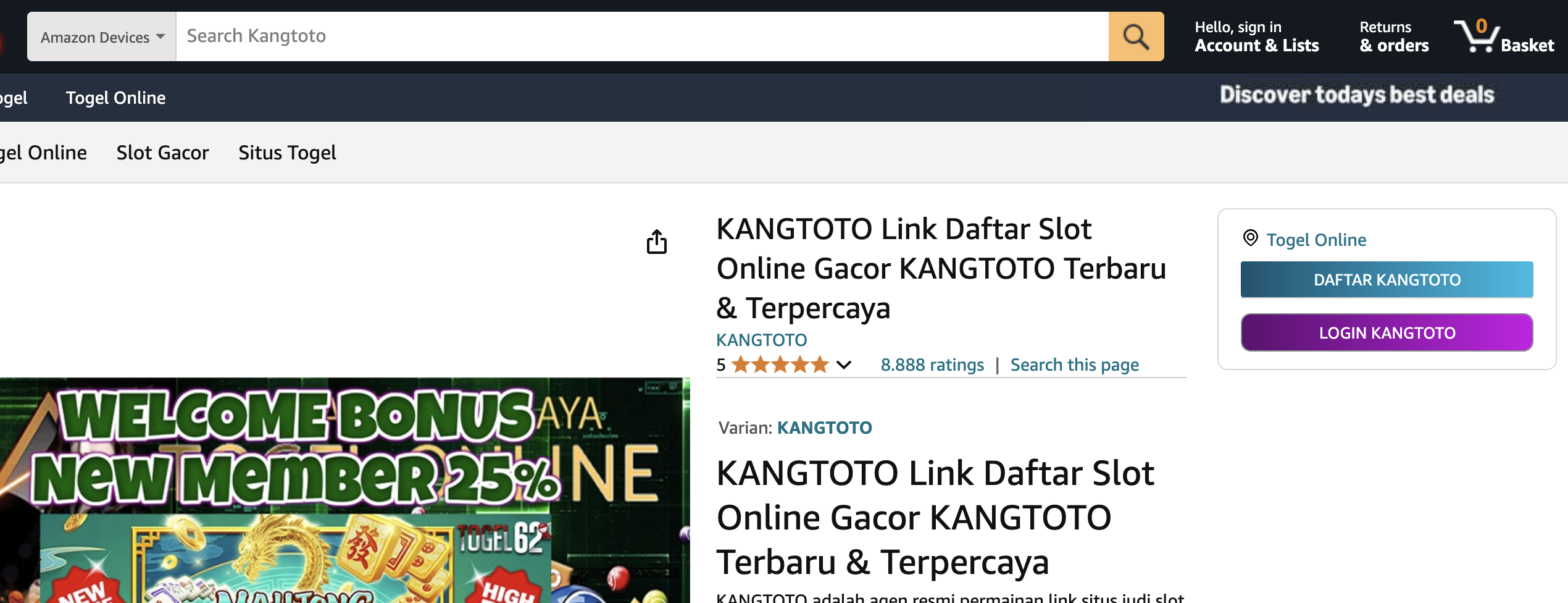

Among the domains that previously returned a SERVFAIL status during DNS resolution, 4.1 percent (41 out of 1,000) were found to be active or serving some form of content. Since this analysis was conducted manually and based on a random sample, no formal methodology was applied to determine whether the observed responses were the result of legitimate DNS recovery, unauthorized takeovers or misconfigurations.
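The sampling step could be approximated with a small probe like the one below. It is a sketch under stated assumptions rather than the tooling used here: the function names `probe` and `extract_title`, the plain-HTTP request, the 5-second timeout, and the 64 KiB read limit are all illustrative choices.

```python
import http.client
import re
import socket
import urllib.error
import urllib.request

TITLE_RE = re.compile(r"<title[^>]*>(.*?)</title>", re.IGNORECASE | re.DOTALL)


def extract_title(html: str) -> str:
    """Return the page title with whitespace collapsed, or '' if there is none."""
    match = TITLE_RE.search(html)
    return " ".join(match.group(1).split()) if match else ""


def probe(domain: str, timeout: float = 5.0) -> dict:
    """Record the resolved IP, HTTP status code, and page title for one domain."""
    result = {"domain": domain, "ip": None, "status": None, "title": ""}
    try:
        result["ip"] = socket.gethostbyname(domain)
    except (OSError, UnicodeError):
        return result  # name does not resolve (or is not a valid hostname)
    try:
        with urllib.request.urlopen(f"http://{domain}/", timeout=timeout) as resp:
            result["status"] = resp.status
            body = resp.read(65536).decode("utf-8", errors="replace")
            result["title"] = extract_title(body)
    except urllib.error.HTTPError as err:
        result["status"] = err.code  # 4xx/5xx responses still carry a status code
    except (urllib.error.URLError, OSError, http.client.HTTPException):
        pass  # connection refused, timeout, malformed response, etc.
    return result


if __name__ == "__main__":
    # example.com is a placeholder; the research used a random sample of domains.
    print(probe("example.com"))
```

The status code, title, and IP together are usually enough to triage a sample by hand, for example by grouping domains that resolve to the same IP or share a spam-like title.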

However, several domains appeared to be hosting suspicious or harmful content, including malvertising, phishing pages, SEO spam, and in some cases, websites related to online casinos, betting platforms, scam promotions, and other forms of deceptive marketing. These findings suggest that a portion of these previously dangling domains may have been opportunistically claimed by malicious actors or are now being used as part of automated exploitation and monetization campaigns.

Conclusion: Core Security Gap and Recommendations

The use of cloud services introduces a complex set of challenges, particularly around the protection of data and infrastructure. Under the shared responsibility model, it is ultimately the user’s duty to secure the resources they deploy in the cloud. While DigitalOcean functions as a cloud infrastructure provider, it does not operate as a domain registrar, introducing additional complications when DNS configurations are left unattended.

One key recommendation is for domain registrars to implement mechanisms to detect abandoned or misconfigured domains, particularly those returning SERVFAIL responses due to dangling nameservers. By proactively identifying such cases, registrars could play a meaningful role in reducing the risk of DNS zone takeovers.

If you have research ideas or see opportunities for collaboration where my expertise aligns, feel free to reach out to me via X. I welcome the chance to connect and explore new avenues. Additionally, if you have feedback on this article or the methods discussed, please don’t hesitate to share your thoughts.

Shoutout to my friend Ankit Pandey for bringing some fantastic ideas to the table while we brainstormed. Much appreciated, and kudos!

Signing off until the next solid research breakthrough!

- Teki: The name assigned by the author to the custom automation used for DNS zone takeover operations. It orchestrates domain validation, takeover attempts, verification, and cleanup processes in an automated and scalable manner. ↩︎

- NOERROR: A standard DNS response code indicating that the DNS query was successfully processed and the domain exists. This response means the nameserver was able to resolve the query, even if the specific record type requested (e.g., A, MX, TXT) is not present. It confirms that the name exists in the DNS and that the responding server could answer for it. ↩︎

- SERVFAIL: A DNS response code indicating a server failure. It means the DNS resolver or authoritative nameserver encountered an error while processing the query but cannot provide specific details. In the context of dangling DNS zones, a SERVFAIL response often suggests that the nameserver is still delegated but the corresponding zone no longer exists or is misconfigured on the authoritative server. ↩︎

- NXDOMAIN: A DNS response code that stands for “Non-Existent Domain.” It indicates that the queried domain name does not exist in the DNS. This typically occurs when a domain has expired, has not been registered, or is incorrectly typed. ↩︎

- Sinkhole Server: A web server configured to serve specific content, typically used for verification or analysis. Its IP address is added as an A record to the vulnerable domain in order to confirm DNS control during the takeover process. ↩︎

- Homograph Attack: An IDN homograph attack uses look-alike characters from other scripts to mimic a legitimate website’s domain name, tricking users into believing they are visiting the real site. ↩︎
